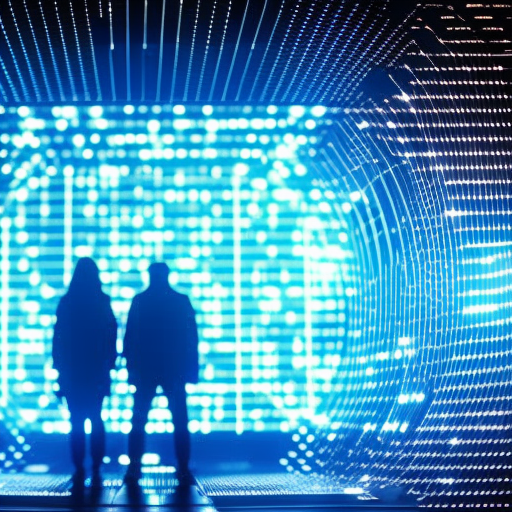

Summary: Neural architecture search (NAS) automates the design of neural networks. It uses algorithms to search for a high-performing architecture, including the number of layers, the types of layers, and the connections between them. NAS has gained popularity in recent years because the architectures it finds can match or exceed manually designed networks in accuracy and efficiency.

Introduction to Neural Architecture Search

Neural architecture search automates the design of neural networks. Traditionally, human experts design a network’s architecture by hand, which is time-consuming and labor-intensive. NAS aims to overcome this limitation by using algorithms to search automatically for an architecture that performs well on the target task.

The Need for Neural Architecture Search

The performance of a neural network depends heavily on its architecture. The choice of architecture significantly affects the network’s accuracy, efficiency, and generalization capabilities. However, finding the optimal architecture for a given task is challenging because the search space of possible architectures is vast: the number of candidates grows exponentially with the number of layers and the number of design choices per layer.
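
To give a sense of scale, the short sketch below counts the architectures in a simplified, hypothetical search space: a chain of layers where each layer picks one operation from a fixed menu, optionally with a skip connection between any pair of layers. The function name and the numbers used are illustrative assumptions, not taken from any particular NAS benchmark.

```python
def chain_search_space_size(num_layers: int, num_ops: int, allow_skips: bool = False) -> int:
    """Count architectures in a simple chain-structured search space (illustrative)."""
    # Each layer independently picks one of `num_ops` operations.
    size = num_ops ** num_layers
    if allow_skips:
        # Every pair of layers (i < j) may or may not be joined by a skip connection.
        num_pairs = num_layers * (num_layers - 1) // 2
        size *= 2 ** num_pairs
    return size


if __name__ == "__main__":
    print(chain_search_space_size(10, 5))
    print(chain_search_space_size(10, 5, allow_skips=True))
```

Even with only 10 layers and 5 candidate operations, this space contains 5^10 = 9,765,625 chains, and allowing optional skip connections multiplies that count by 2^45.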

Methods of Neural Architecture Search

Common NAS methods include reinforcement learning, evolutionary algorithms, and gradient-based optimization. These methods explore the search space iteratively, using each candidate’s performance on a given task to guide which architectures to try next.

Reinforcement Learning-based Neural Architecture Search

Reinforcement learning-based NAS trains a controller neural network to generate candidate architectures. Each generated architecture is trained and evaluated, and its performance (for example, validation accuracy) is fed back to the controller as a reward, which the controller uses to update its parameters. The updated controller then generates new architectures, and the process repeats until a satisfactory architecture is found or the search budget is exhausted.
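
The loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not an actual NAS system: the "controller" is just a table of logits per layer (rather than a neural network), it is updated with the REINFORCE policy-gradient rule, and `toy_reward` is a made-up stand-in for the expensive step of training a candidate and measuring its validation accuracy. The operation names in `OPS` are hypothetical.

```python
import math
import random

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical operation menu
NUM_LAYERS = 4


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(arch):
    """Stand-in for validation accuracy: fraction of layers using conv3x3 (assumption)."""
    return sum(1.0 for op in arch if op == "conv3x3") / len(arch)


def reinforce_search(steps=500, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Controller parameters: one row of logits per layer.
    logits = [[0.0] * len(OPS) for _ in range(NUM_LAYERS)]
    baseline = 0.0  # moving average of rewards, used to reduce variance
    for _ in range(steps):
        # 1. The controller samples a candidate architecture.
        chosen = [
            rng.choices(range(len(OPS)), weights=softmax(row))[0] for row in logits
        ]
        # 2. The candidate is "evaluated" to obtain a reward.
        reward = toy_reward([OPS[i] for i in chosen])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # 3. REINFORCE update: the gradient of log-probability w.r.t. each logit
        #    is (1 if chosen else 0) - probability.
        for layer, idx in enumerate(chosen):
            probs = softmax(logits[layer])
            for k in range(len(OPS)):
                grad_log_prob = (1.0 if k == idx else 0.0) - probs[k]
                logits[layer][k] += lr * advantage * grad_log_prob
    # Return the most likely operation for each layer.
    return [OPS[max(range(len(OPS)), key=lambda k: row[k])] for row in logits]


if __name__ == "__main__":
    print(reinforce_search())
```

In a real setting, step 2 dominates the cost, since every sampled architecture has to be trained before its reward is known.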

Evolutionary Algorithm-based Neural Architecture Search

Evolutionary algorithm-based NAS applies evolutionary principles, such as mutation and selection, to a population of candidate architectures. Each architecture is evaluated, and the best-performing ones are selected for reproduction. The selected architectures undergo mutation or crossover to produce new architectures, and the process continues for several generations, until the search budget is exhausted or performance stops improving.
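
A minimal sketch of this evolve-evaluate-select cycle follows. It is illustrative only: architectures are encoded as lists of hypothetical operation names, and `toy_fitness` (agreement with an arbitrary `TARGET` pattern) is an assumed stand-in for training and validating each candidate. The selected parents are carried over unchanged into the next generation (elitism), one of several possible design choices.

```python
import random

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical operation menu
# Arbitrary pattern that the toy fitness function rewards (assumption).
TARGET = ["conv3x3", "conv3x3", "maxpool", "conv5x5", "maxpool", "identity"]
NUM_LAYERS = len(TARGET)


def toy_fitness(arch):
    """Stand-in for validation accuracy: fraction of layers matching TARGET."""
    return sum(a == t for a, t in zip(arch, TARGET)) / len(TARGET)


def mutate(arch, rng, rate=0.2):
    """Replace each layer's operation with a random one with probability `rate`."""
    return [rng.choice(OPS) if rng.random() < rate else op for op in arch]


def crossover(a, b, rng):
    """Single-point crossover: prefix from one parent, suffix from the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def evolve(pop_size=20, generations=30, num_parents=5, seed=0):
    rng = random.Random(seed)
    population = [
        [rng.choice(OPS) for _ in range(NUM_LAYERS)] for _ in range(pop_size)
    ]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        # Evaluate and rank the population, best first.
        ranked = sorted(population, key=toy_fitness, reverse=True)
        history.append(toy_fitness(ranked[0]))
        # Selection: keep the top candidates as parents.
        parents = ranked[:num_parents]
        # Reproduction: fill the rest of the population with mutated offspring.
        children = []
        while len(children) < pop_size - num_parents:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = parents + children
    return max(population, key=toy_fitness), history


if __name__ == "__main__":
    best, history = evolve()
    print(best, toy_fitness(best))
```

Because the parents survive each generation, the best fitness in the population never decreases from one generation to the next.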

Gradient-based Neural Architecture Search

Gradient-based NAS treats architecture search as a continuous optimization problem. The discrete choice of architecture is relaxed into a differentiable function of continuous architecture parameters, and the search updates those parameters using gradient descent. Because this avoids training every candidate separately, it allows for efficient exploration of the search space and has been shown to achieve state-of-the-art results in certain domains.
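
One common way to make the architecture differentiable is to replace a hard choice between operations with a softmax-weighted mixture of all of them, then learn the mixture weights by gradient descent. The toy sketch below illustrates that idea under strong simplifying assumptions: the candidate operations are hypothetical scalar functions with no trainable weights of their own, the gradient is derived by hand, and the target function is chosen so that one operation fits it exactly. A real system would optimize network weights alongside the architecture parameters.

```python
import math

# Hypothetical candidate operations acting on a scalar input.
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
    "negate": lambda x: -x,
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def search(xs, ys, steps=200, lr=0.05):
    names = list(CANDIDATE_OPS)
    alphas = [0.0] * len(names)  # continuous architecture parameters
    for _ in range(steps):
        weights = softmax(alphas)
        grads = [0.0] * len(names)
        for x, y in zip(xs, ys):
            outs = [CANDIDATE_OPS[n](x) for n in names]
            # Mixed operation: softmax-weighted sum of all candidates.
            mixed = sum(w * o for w, o in zip(weights, outs))
            err = mixed - y
            for j in range(len(names)):
                # d(mixed)/d(alpha_j) = w_j * (out_j - mixed); loss is mean squared error.
                grads[j] += 2.0 * err * weights[j] * (outs[j] - mixed) / len(xs)
        # Gradient descent step on the architecture parameters.
        alphas = [a - lr * g for a, g in zip(alphas, grads)]
    # Discretize: keep the operation with the largest architecture parameter.
    best = names[max(range(len(names)), key=lambda j: alphas[j])]
    return best, dict(zip(names, softmax(alphas)))


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [2.0 * x for x in xs]  # target behaves like the "double" operation
    print(search(xs, ys))
```

After the continuous search, the final architecture is obtained by discretizing, here by keeping the single operation with the largest parameter.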

Advantages and Challenges of Neural Architecture Search

The main advantage of NAS is its ability to automate the design of neural networks, saving time and effort for human experts. NAS has been successful in improving the performance of neural networks, achieving state-of-the-art results in various tasks, including image classification, object detection, and natural language processing.

However, NAS also comes with challenges. The search space of possible architectures is vast, making the search process computationally expensive. Evaluating candidate architectures is time-consuming as well, especially for complex tasks, because each candidate typically has to be trained before it can be scored. Furthermore, NAS may generate architectures that are difficult to interpret, making it hard to gain insight into the underlying mechanisms of the network.

Conclusion

Neural architecture search is a promising approach to automating the design of neural networks. It has the potential to significantly improve the performance of neural networks by finding architectures well suited to the task at hand. While challenges remain, ongoing research and advances in algorithms and computational resources are expected to further improve the effectiveness and efficiency of neural architecture search.