Summary:

Generative adversarial networks (GANs) are a type of machine learning model that consists of two neural networks: a generator and a discriminator. The generator creates new data samples, such as images or text, while the discriminator tries to distinguish between real and generated samples. Through an adversarial training process, the generator learns to produce increasingly realistic samples, while the discriminator becomes better at identifying the generated ones. GANs have been successfully applied in various domains, including image synthesis, text generation, and data augmentation.

Introduction:

Generative adversarial networks (GANs) are a class of machine learning models introduced by Ian Goodfellow and his colleagues in 2014. GANs have gained significant attention in the field of artificial intelligence due to their ability to generate realistic data samples. The key idea behind GANs is to train two neural networks simultaneously: a generator and a discriminator. The generator learns to produce new data samples, while the discriminator learns to distinguish between real and generated samples. Ideally, this adversarial training process improves both networks over time.

How GANs Work:

GANs consist of two main components: the generator and the discriminator. The generator takes random noise as input and produces synthetic data samples, such as images or text. The discriminator, on the other hand, receives both real and generated samples and tries to classify them correctly. The goal of the generator is to produce samples that are indistinguishable from real ones, while the discriminator aims to correctly identify the generated samples.
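To make the two roles concrete, here is a minimal sketch for one-dimensional data. It assumes a hypothetical toy setup that is not part of the text above: the generator is an affine map of Gaussian noise, the discriminator is logistic regression, and the names `generator`, `discriminator`, `discriminator_loss`, and `generator_loss` are illustrative. Real GANs use deep networks trained with automatic differentiation; only the structure of the losses carries over. The generator loss shown is the commonly used variant $-\log D(G(z))$.

```python
import math
import random
from typing import List


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def generator(z: float, a: float, b: float) -> float:
    """Hypothetical toy generator: turns a noise value z into a sample a*z + b."""
    return a * z + b


def discriminator(x: float, w: float, c: float) -> float:
    """Hypothetical toy discriminator: logistic regression returning P(x is real)."""
    return sigmoid(w * x + c)


def discriminator_loss(real: List[float], fake: List[float], w: float, c: float) -> float:
    """Binary cross-entropy with label 1 for real samples and 0 for generated ones."""
    loss_real = -sum(math.log(discriminator(x, w, c)) for x in real) / len(real)
    loss_fake = -sum(math.log(1.0 - discriminator(x, w, c)) for x in fake) / len(fake)
    return loss_real + loss_fake


def generator_loss(fake: List[float], w: float, c: float) -> float:
    """Generator loss -log D(G(z)): small when the discriminator calls fakes real."""
    return -sum(math.log(discriminator(x, w, c)) for x in fake) / len(fake)


rng = random.Random(0)
real = [rng.gauss(4.0, 1.0) for _ in range(256)]    # "real" data drawn from N(4, 1)
noise = [rng.gauss(0.0, 1.0) for _ in range(256)]   # generator input z ~ N(0, 1)
fake = [generator(z, a=1.0, b=0.0) for z in noise]  # untrained generator output

# A discriminator that always outputs 0.5 (w = c = 0) cannot tell the samples apart.
print(discriminator_loss(real, fake, w=0.0, c=0.0))  # 2*log(2), about 1.386
# A discriminator with its decision boundary at x = 2 separates them much better.
print(discriminator_loss(real, fake, w=2.0, c=-4.0))
```

Training alternates between lowering `discriminator_loss` with respect to the discriminator's parameters (`w`, `c`) and lowering `generator_loss` with respect to the generator's parameters (`a`, `b`).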

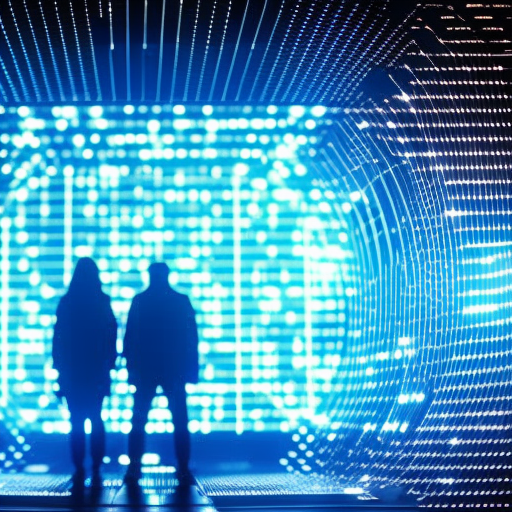

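During training, the generator and discriminator play a two-player minimax game, and their parameters are updated in alternation. The discriminator is trained to maximize its accuracy in distinguishing real samples from generated ones, while the generator is trained to make the discriminator misclassify its samples. In practice, the generator is usually trained to maximize the probability that the discriminator labels its samples as real, because this gives stronger gradients early in training than directly minimizing the discriminator's objective. In theory, the game reaches equilibrium when the generator's distribution matches the data distribution and the discriminator can do no better than guessing, outputting 1/2 for every input; in practice, training does not always converge to this point.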
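For reference, the game can be written as the value function from the original 2014 GAN paper by Goodfellow et al., where $p_{\text{data}}$ is the data distribution, $p_z$ is the noise distribution, and $p_g$ is the distribution of generated samples $G(z)$. The second line is the optimal discriminator for a fixed generator, and the third states what happens at the global optimum.

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}

p_g = p_{\text{data}} \;\Rightarrow\; D^*_G(x) = \tfrac{1}{2}, \qquad V(D^*_G, G) = -\log 4
```

The non-saturating variant used in practice replaces the generator's $\min_G \mathbb{E}_{z}[\log(1 - D(G(z)))]$ with $\max_G \mathbb{E}_{z}[\log D(G(z))]$.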

Applications of GANs:

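GANs have found applications in various domains, including image synthesis, text generation, and data augmentation. In image synthesis, GANs are trained to generate new, realistic images that resemble those in a given dataset. The adversarial loss has also proved useful in related image-to-image tasks such as super-resolution (for example, SRGAN), where a generator conditioned on a low-resolution input produces a high-resolution image, and the discriminator pushes it toward sharper, more realistic detail than a pixel-wise loss alone tends to produce.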
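A GAN-based super-resolution model is typically trained with a combined objective: a content term that keeps the output close to the true high-resolution image, plus a small adversarial term that rewards outputs the discriminator judges to be real. The sketch below is a simplified, hypothetical version: it uses pixel-wise mean squared error as the content term (SRGAN also used a feature-space content loss), the adversarial term $-\log D$, and a `weight` default of 1e-3, the adversarial weight reported for SRGAN. Images are assumed to be flattened lists of pixel values.

```python
import math
from typing import List


def mse(output: List[float], target: List[float]) -> float:
    """Pixel-wise mean squared error between two flattened images of equal size."""
    if len(output) != len(target):
        raise ValueError("images must have the same number of pixels")
    return sum((o - t) ** 2 for o, t in zip(output, target)) / len(output)


def sr_generator_loss(
    sr_image: List[float],
    hr_image: List[float],
    d_scores: List[float],
    weight: float = 1e-3,
) -> float:
    """Hypothetical combined super-resolution generator loss.

    sr_image: generator output, hr_image: ground-truth high-resolution image,
    d_scores: discriminator probabilities that the generated outputs are real.
    """
    content = mse(sr_image, hr_image)
    adversarial = -sum(math.log(d) for d in d_scores) / len(d_scores)
    return content + weight * adversarial


# Perfect reconstruction, but the discriminator is unsure (D = 0.5).
print(sr_generator_loss([0.2, 0.8], [0.2, 0.8], [0.5]))  # 1e-3 * log(2), about 0.000693
```

The small weight keeps the output anchored to the ground truth while still letting the discriminator penalize blurry, unrealistic detail.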

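GANs have also been applied to text generation, with the aim of producing new text that mimics the style and content of a training corpus. Text is harder than images for GANs because it consists of discrete tokens: sampling a token is not differentiable, so the discriminator's gradient cannot flow back to the generator directly. Approaches such as SeqGAN work around this by training the generator with reinforcement learning (policy gradients). Potential applications include natural language processing, chatbots, and content creation.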

GANs have also been used for data augmentation, a technique that involves generating additional training data to improve the performance of machine learning models. By generating new samples that are similar to the original data, GANs can help overcome the limitations of small datasets and improve the generalization ability of models.
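A minimal sketch of GAN-based augmentation, assuming a generator has already been trained: synthetic samples are drawn and mixed into the real training set at a chosen ratio. The function `augment_with_synthetic`, its `ratio` parameter, and the stand-in generator are hypothetical. In a real pipeline the stand-in would be replaced by sampling the trained generator, and for classification tasks the generator would typically be conditioned on the class label so that each synthetic sample comes with a label.

```python
import random
from typing import Callable, List


def augment_with_synthetic(
    real: List[float],
    generate: Callable[[random.Random], float],
    ratio: float,
    seed: int = 0,
) -> List[float]:
    """Return the real samples plus round(ratio * len(real)) synthetic ones, shuffled."""
    if ratio < 0:
        raise ValueError("ratio must be non-negative")
    rng = random.Random(seed)
    n_synthetic = round(ratio * len(real))
    synthetic = [generate(rng) for _ in range(n_synthetic)]
    combined = real + synthetic  # new list; the caller's data is not modified
    rng.shuffle(combined)        # mix real and synthetic samples before training
    return combined


def stand_in_generator(rng: random.Random) -> float:
    """Hypothetical stand-in for a trained generator that has learned N(4, 1)."""
    return rng.gauss(4.0, 1.0)


data_rng = random.Random(1)
real = [data_rng.gauss(4.0, 1.0) for _ in range(100)]
train_set = augment_with_synthetic(real, stand_in_generator, ratio=0.5)
print(len(train_set))  # 150
```

How much synthetic data helps depends on how faithfully the generator matches the real distribution, so the ratio is usually treated as a hyperparameter and validated on held-out real data.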

Challenges and Future Directions:

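Despite their success, GANs still face several challenges. One major challenge is training instability: instead of converging, the generator and discriminator can oscillate, or the discriminator can become so accurate that the generator receives almost no useful gradient. Researchers have proposed various techniques to stabilize GAN training, such as modifying the loss function (for example, the non-saturating generator loss or the Wasserstein loss) or adding regularization (for example, gradient penalties or spectral normalization).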
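One loss modification already appears in the original GAN paper. If the generator minimizes $\log(1 - D(G(z)))$ and the discriminator confidently rejects its samples, as often happens early in training, the gradient nearly vanishes; minimizing $-\log D(G(z))$ instead keeps it large. The sketch below compares the two gradients with respect to the discriminator's logit `s` for a generated sample, where $D = \sigma(s)$; the function names are illustrative.

```python
import math


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def minimax_grad(s: float) -> float:
    """d/ds of the original generator loss log(1 - D), where D = sigmoid(s)."""
    return -sigmoid(s)


def non_saturating_grad(s: float) -> float:
    """d/ds of the non-saturating generator loss -log D, where D = sigmoid(s)."""
    return -(1.0 - sigmoid(s))


# Very negative s means the discriminator confidently labels the sample as fake.
for s in (-6.0, 0.0, 6.0):
    print(f"D={sigmoid(s):.4f}  minimax={minimax_grad(s):+.4f}  "
          f"non-saturating={non_saturating_grad(s):+.4f}")
```

At s = -6 the discriminator assigns D of about 0.0025 to the generated sample: the minimax gradient has magnitude about 0.0025, while the non-saturating gradient has magnitude about 0.9975, so the generator still receives a useful learning signal.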

Another challenge is mode collapse, where the generator produces only a limited variety of samples and ignores much of the diversity present in the training data. Addressing this issue is an active area of research; proposed approaches include Wasserstein GANs, minibatch discrimination, and hybrid models that combine GANs with variational autoencoders, all aimed at encouraging diversity in the generated samples.
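As an illustration of the Wasserstein GAN idea, the sketch below writes out its losses under simplifying assumptions: the critic (WGAN's name for the discriminator) outputs unbounded real-valued scores rather than probabilities, and the helper names are hypothetical. The critic is trained to maximize the gap between its average score on real and on generated samples, and the generator is trained to raise the critic's score on its own samples, which gives the generator useful gradients even when real and generated distributions barely overlap. The original WGAN paper kept the critic approximately Lipschitz by clipping its weights to a small range (c = 0.01 in its experiments); later work (WGAN-GP) replaced clipping with a gradient penalty.

```python
from typing import List


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def critic_loss(scores_real: List[float], scores_fake: List[float]) -> float:
    """Critic maximizes mean f(real) - mean f(fake); return the negative to minimize."""
    return -(mean(scores_real) - mean(scores_fake))


def wgan_generator_loss(scores_fake: List[float]) -> float:
    """Generator tries to raise the critic's scores on generated samples."""
    return -mean(scores_fake)


def clip_weights(weights: List[float], c: float = 0.01) -> List[float]:
    """Clamp every critic weight to [-c, c] after each critic update (original WGAN)."""
    return [max(-c, min(c, w)) for w in weights]


print(critic_loss([1.5, 0.5], [-0.5, -0.25]))  # -(1.0 - (-0.375)) = -1.375
print(clip_weights([0.5, -0.2, 0.003]))        # [0.01, -0.01, 0.003]
```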

In the future, GANs are expected to continue advancing and finding applications in new domains. Extensions such as conditional GANs, which generate samples conditioned on specific attributes or inputs (for example, a class label), are already widely used, and researchers continue to develop new variants. GANs are also being combined with other techniques, such as reinforcement learning, to create more powerful and versatile models.
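The simplest form of conditioning, used in the original conditional GAN formulation, feeds the condition y to both networks alongside their usual inputs. The sketch below assumes y is a class label encoded as a one-hot vector and joined to each input by concatenation; the function names and the sizes (100-dimensional noise, 10 classes) are hypothetical.

```python
import random
from typing import List


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode a class label as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def conditional_generator_input(label: int, num_classes: int, noise_dim: int,
                                rng: random.Random) -> List[float]:
    """Generator input [z, y]: Gaussian noise z concatenated with one-hot label y."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(label, num_classes)


def conditional_discriminator_input(x: List[float], label: int,
                                    num_classes: int) -> List[float]:
    """Discriminator input [x, y]: the sample together with the label it should match."""
    return x + one_hot(label, num_classes)


rng = random.Random(0)
g_in = conditional_generator_input(label=3, num_classes=10, noise_dim=100, rng=rng)
print(len(g_in))   # 110
print(g_in[100:])  # one-hot encoding of class 3
```

Because the discriminator also sees the label, it can reject samples that look realistic but do not match the requested class, which pushes the generator to respect the condition.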

Conclusion:

Generative adversarial networks (GANs) are a powerful class of machine learning models that have substantially advanced data generation, particularly for images. By training a generator and discriminator in an adversarial manner, GANs can produce realistic data samples that resemble the training data. GANs have been successfully applied in various domains, including image synthesis, text generation, and data augmentation. While challenges such as training instability and mode collapse remain, GANs continue to be an active area of research with promising future directions.