Summary:

Reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment. It involves the agent taking actions in the environment and receiving feedback in the form of rewards or punishments. Through trial and error, the agent learns to maximize its rewards and improve its decision-making abilities. Reinforcement learning has been successfully applied in various domains, including robotics, game playing, and autonomous vehicles.

Introduction:

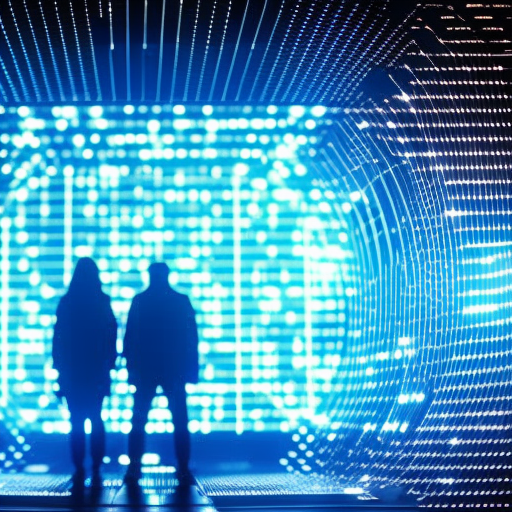

Reinforcement learning is a subfield of machine learning that focuses on how an agent can learn to make decisions by interacting with an environment. Unlike supervised learning, where the learner is given labeled examples, or unsupervised learning, where the learner finds patterns without explicit feedback, reinforcement learning relies on trial and error, guided by reward signals, to improve decision-making.

Key Concepts:

Agent: The entity that interacts with the environment and learns from it. The agent takes actions based on its current state and receives feedback in the form of rewards or punishments.

Environment: The external system or world in which the agent operates. It provides the agent with the necessary information and feedback to learn from.

State: The current situation or configuration of the agent and the environment. It represents the information that the agent uses to make decisions.

Action: A choice made by the agent in response to a given state. Actions can have both immediate consequences and long-term effects on the agent’s rewards.

Reward: The feedback provided to the agent after taking an action. Rewards can be positive or negative and are used to guide the agent’s learning process.

Policy: The strategy or set of rules that the agent uses to determine its actions based on the current state. The policy can be deterministic or stochastic.

Value Function: A function that estimates the expected cumulative reward an agent will receive starting from a given state (a state-value function) or from taking a given action in a given state (an action-value function). It helps the agent evaluate the long-term consequences of its decisions.

Exploration vs. Exploitation: A trade-off in reinforcement learning between exploring new actions and exploiting the current knowledge to maximize rewards. Exploration allows the agent to discover potentially better actions, while exploitation focuses on using the known best actions.
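
The policy, value estimates, and exploration trade-off described above can be tied together in a small sketch. One common way to balance exploration and exploitation is an epsilon-greedy rule, which is a stochastic policy: with probability `epsilon` the agent tries a random action, and otherwise it picks the action with the highest estimated value. The function name `epsilon_greedy`, the list `estimates`, and the parameter values are illustrative assumptions, not something prescribed by the text.

```python
import random

def epsilon_greedy(action_values, epsilon, rng=random):
    """Return an action index given per-action value estimates.

    With probability epsilon a uniformly random action is chosen (exploration);
    otherwise the action with the highest estimate is chosen (exploitation).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))  # explore
    # Exploit: ties are broken in favour of the lowest index.
    return max(range(len(action_values)), key=lambda a: action_values[a])

# Hypothetical value estimates for three actions in some state.
estimates = [0.2, 0.8, 0.5]
print(epsilon_greedy(estimates, epsilon=0.0))  # → 1 (pure exploitation)
print(epsilon_greedy(estimates, epsilon=0.1))  # usually 1, occasionally random
```

Setting `epsilon` to 0 gives a deterministic, purely greedy policy, while larger values spend more time exploring. In practice `epsilon` is often reduced over time, though that schedule is a design choice rather than a requirement.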

Algorithm:

Reinforcement learning algorithms typically follow an iterative process. The agent starts with an initial policy and value function. It interacts with the environment, observes the current state, takes an action based on its policy, and receives a reward. The agent then updates its policy and value function based on the observed reward and the new state. This process continues until the agent’s performance converges or a predefined stopping criterion is met.
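
The iterative process above can be made concrete with a minimal sketch. The text does not name a specific algorithm, so this example assumes tabular Q-learning as one possible instance. The environment is a hypothetical five-state corridor in which the agent starts at state 0 and receives a reward of 1 on reaching state 4. The names `step` and `train`, and the values of `alpha`, `gamma`, and `epsilon`, are all illustrative assumptions.

```python
import random

N_STATES = 5      # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy (a sketch)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # initial value estimates
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Policy: mostly greedy on current estimates, sometimes random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            # Interact with the environment and observe the outcome.
            next_state, reward, done = step(state, action)
            # Update the estimate toward reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q = train()
greedy_policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)  # → [1, 1, 1, 1]
```

Here the stopping criterion is simply a fixed number of episodes. After training, the greedy policy moves right in every non-goal state, which is the shortest route to the reward in this toy setting.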

Applications:

Reinforcement learning has been successfully applied in various domains. In robotics, it has been used to teach robots to perform complex tasks, such as grasping objects or walking. In game playing, reinforcement learning algorithms have achieved remarkable results, surpassing human performance in games like Go and chess. Autonomous vehicles can also benefit from reinforcement learning by learning to navigate complex environments and make decisions in real time.

Conclusion:

Reinforcement learning is a powerful approach to machine learning that enables an agent to learn from its interactions with an environment. By letting agents improve their decisions through reward feedback, reinforcement learning has the potential to reshape fields ranging from robotics to game playing and autonomous vehicles. As research continues, it is expected to play an increasingly significant role in artificial intelligence.