Summary:

Deepfake technology uses artificial intelligence (AI) to create realistic fake videos or images that appear genuine. It typically works by manipulating existing footage or superimposing one person's likeness onto source material to produce a convincing but fabricated result. While deepfakes can be used for harmless entertainment, they also pose significant risks, such as spreading misinformation, damaging reputations, and facilitating fraud. As the technology advances, the need grows for robust detection methods and legal frameworks that address these harms.

Understanding Deepfake Technology:

Deepfake technology relies on deep learning, most notably generative adversarial networks (GANs), although other architectures such as autoencoders are also used. A GAN consists of two neural networks: a generator that produces fake content and a discriminator that tries to distinguish real content from fake. The two are trained against each other: the generator learns to produce output the discriminator cannot tell from real data, while the discriminator learns to catch the generator's mistakes. This adversarial training is what makes the resulting deepfakes so realistic.
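The opposing goals of the two networks can be made concrete with the loss each one minimizes. The following is a minimal sketch, not the training code of any particular deepfake tool: it assumes the discriminator outputs a probability strictly between 0 and 1 that a sample is real, and it uses the commonly used non-saturating form of the generator loss. The function names are illustrative.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy loss for the discriminator on one real/fake pair.

    d_real: discriminator's probability that a real sample is real.
    d_fake: discriminator's probability that a generated sample is real.
    Both values are assumed to lie strictly between 0 and 1.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the fake is judged real."""
    return -math.log(d_fake)


if __name__ == "__main__":
    # (0.9, 0.1): the discriminator is winning; (0.5, 0.5): it is guessing.
    for d_real, d_fake in [(0.9, 0.1), (0.5, 0.5)]:
        print(
            f"d_real={d_real}, d_fake={d_fake}: "
            f"D loss={discriminator_loss(d_real, d_fake):.3f}, "
            f"G loss={generator_loss(d_fake):.3f}"
        )
```

When the discriminator confidently rejects a fake (a low `d_fake`), its own loss is small and the generator's loss is large, so each network improves only at the other's expense. In a real system both losses would be averaged over batches and minimized by gradient descent in a deep learning framework.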

Applications and Concerns:

Deepfake technology has drawn attention for both its legitimate uses and its potential for abuse. On the positive side, the entertainment industry can use it to create realistic visual effects or to bring deceased actors back to the screen. However, deepfakes can also be used to spread misinformation, create fake news, and manipulate public opinion. They are particularly damaging when used to create non-consensual explicit content or to impersonate individuals for fraudulent purposes.

Implications for Society:

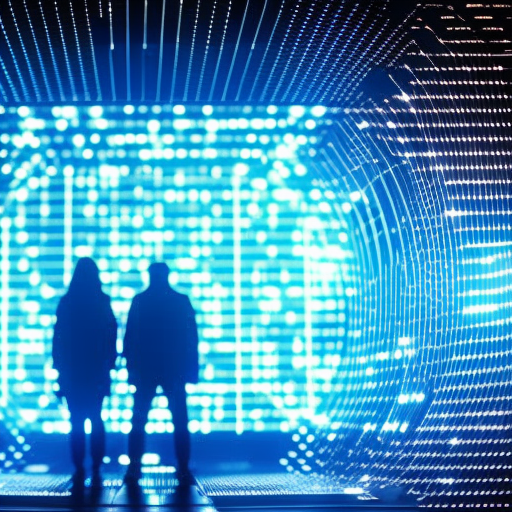

The widespread use of deepfakes can erode trust in visual media and undermine the credibility of video and photographic evidence. As it becomes harder to distinguish real content from fake, the consequences reach into many domains, including politics, journalism, and criminal investigations. Deepfakes can be used to discredit public figures, manipulate elections, or incite violence. The technology also raises privacy concerns, as anyone can become a target of deepfake manipulation.

Addressing the Challenges:

Efforts to mitigate the risks of deepfake technology are under way in several areas. One is the development of deepfake detection methods: researchers are working on algorithms that analyze videos or images for signs of manipulation, such as inconsistencies in facial expressions or unnatural movements. Another is educating the public about the existence and dangers of deepfakes, so that individuals can be more critical consumers of media.
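As a rough illustration of what checking for "unnatural movements" can mean, the sketch below flags frames in which a tracked facial landmark jumps much farther than is typical for the clip. This is a toy heuristic under stated assumptions, not a working deepfake detector: the landmark coordinates are assumed to come from some external face tracker, and the function name and the `ratio` threshold are hypothetical.

```python
import math
from statistics import median


def flag_abrupt_frames(positions, ratio=5.0):
    """Return indices of frames where a landmark moves abnormally far.

    positions: list of (x, y) coordinates of one facial landmark,
               one entry per frame (assumed to come from a face tracker).
    ratio:     a frame is flagged when its jump from the previous frame
               exceeds `ratio` times the median jump in the clip.
    """
    # Distance moved between each pair of consecutive frames.
    jumps = [math.dist(a, b) for a, b in zip(positions, positions[1:])]
    if not jumps:
        return []
    threshold = ratio * median(jumps)
    # jumps[i] is the movement into frame i + 1.
    return [i + 1 for i, jump in enumerate(jumps) if jump > threshold]


if __name__ == "__main__":
    # Smooth motion with one sudden jump into frame 4.
    track = [(0, 0), (1, 0), (2, 0), (3, 0), (20, 0), (21, 0), (22, 0)]
    print(flag_abrupt_frames(track))
```

Practical detectors are usually trained classifiers that combine many such cues, and a single hand-written rule like this one would produce both false alarms and misses.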

Legal and Ethical Considerations:

The legal and ethical implications of deepfakes are complex. Laws and regulations need to be updated to address issues of consent, privacy, and intellectual property that deepfakes raise. There is also a need to balance protecting individuals from harm against preserving freedom of expression. The responsibility to combat deepfakes lies not only with governments and tech companies but also with individuals, who must exercise caution and critical thinking when consuming media.

The Future of Deepfake Technology:

As deepfake technology continues to advance, detection and prevention must keep pace. Researchers are exploring techniques such as using blockchain technology to create tamper-evident digital signatures for media content, so that later alterations can be detected. Collaboration between technology experts, policymakers, and the public is essential to ensure that deepfake technology is used responsibly and ethically, minimizing its potential for harm while maximizing its positive applications.
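The idea of recording media so that later alterations become detectable can be sketched with a simple hash chain. This is an illustrative simplification, not a real blockchain and not a public-key digital signature scheme: each record stores a SHA-256 fingerprint of the media bytes plus the hash of the previous record, so changing any earlier record invalidates the chain. All function names and the record layout are assumptions made for this example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(media: bytes) -> str:
    """SHA-256 fingerprint of the raw media bytes."""
    return hashlib.sha256(media).hexdigest()


def _record_hash(index: int, media_hash: str, prev_hash: str) -> str:
    """Hash of a record's contents, serialized deterministically."""
    payload = json.dumps(
        {"index": index, "media_hash": media_hash, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain: list, media: bytes) -> None:
    """Register a media item by appending a linked record to the chain."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS
    index = len(chain)
    media_hash = fingerprint(media)
    chain.append({
        "index": index,
        "media_hash": media_hash,
        "prev_hash": prev_hash,
        "record_hash": _record_hash(index, media_hash, prev_hash),
    })


def verify_chain(chain: list) -> bool:
    """Check that every record is intact and linked to its predecessor."""
    prev_hash = GENESIS
    for index, rec in enumerate(chain):
        if rec["index"] != index or rec["prev_hash"] != prev_hash:
            return False
        expected = _record_hash(rec["index"], rec["media_hash"], rec["prev_hash"])
        if rec["record_hash"] != expected:
            return False
        prev_hash = rec["record_hash"]
    return True


def is_registered(chain: list, media: bytes) -> bool:
    """True if these exact bytes were registered in the chain."""
    target = fingerprint(media)
    return any(rec["media_hash"] == target for rec in chain)


if __name__ == "__main__":
    chain = []
    append_record(chain, b"original video bytes")
    print(verify_chain(chain))                          # chain is intact
    print(is_registered(chain, b"edited video bytes"))  # altered copy is unknown
```

A scheme like this does not detect deepfakes as such. It only shows whether a file matches a previously registered original, and a production system would also need signatures tied to the publisher's identity and a ledger that no single party can rewrite.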